Operant Conditioning – 4 Interesting Experiments by B.F. Skinner

Operant conditioning might sound like something out of a dystopian novel. But it’s not. It’s a very real thing that was forged by a brilliant, yet quirky, psychologist. Today, we will take a quick look at his work as well as a few odd experiments that went with it…

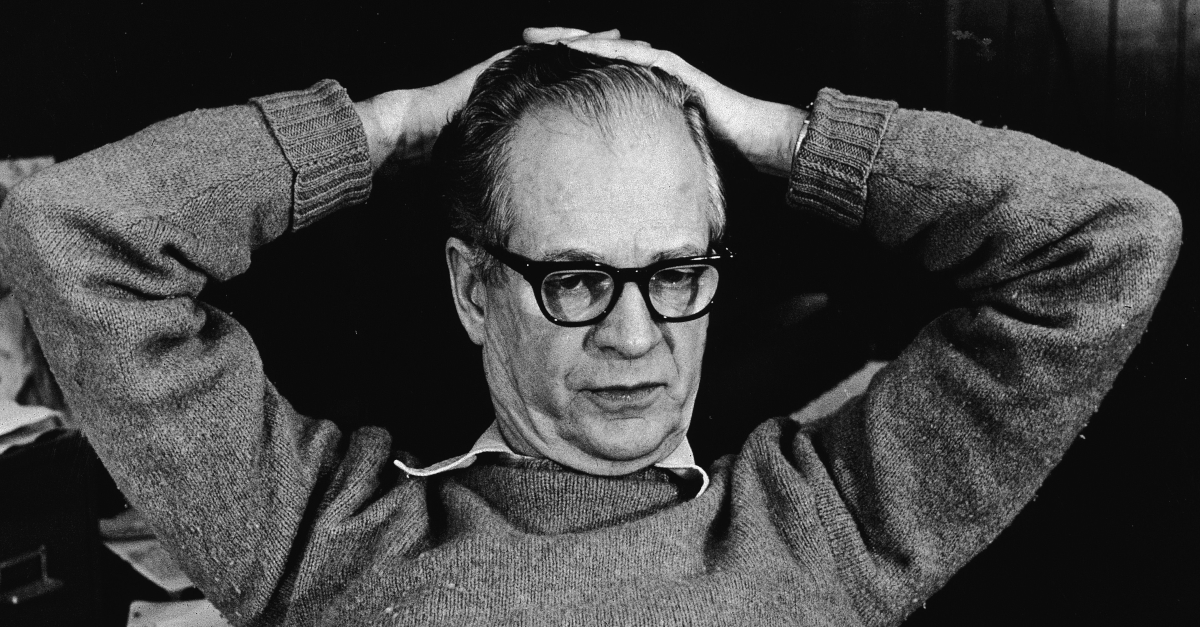

There are few names in psychology better known than B. F. Skinner. First-year psychology students scribble endless lecture notes on him. Doctoral candidates cite his work in their dissertations as they test whether a rat’s behavior can be used to predict behavior in humans.

Skinner is one of the most famous psychologists of the modern era, best known for his experiments on operant conditioning. But how did he become such a central figure in these Intro to Psych courses? And how did he develop the theories and methodologies cited by those sleep-deprived Ph.D. students?

THE FATHER OF OPERANT CONDITIONING

Skinner spent his life studying the way we behave and act and, more importantly, how this behavior can be modified.

He viewed Ivan Pavlov’s classical model of behavioral conditioning as being “too simplistic a solution” to fully explain the complexities of human (and animal) behavior and learning. Because of this, Skinner started to look for a better way to explain why we do things.

His early work was based on Edward Thorndike’s 1898 Law of Effect. Skinner went on to expand on the idea that most of our behavior is directly related to the consequences of said behavior. His expanded model of behavioral learning would be called operant conditioning. This centered around two things…

- The concepts of behaviors – the actions an organism or test subject exhibits

- The operants – the environmental response/consequences directly following the behavior

But, it’s important to note that the term “consequences” can be misleading. This is because there doesn’t need to be a causal relationship between the behavior and the operant. Skinner broke these responses down into three parts.

1. REINFORCERS – These give the organism a desirable stimulus and serve to increase the frequency of the behavior.

2. PUNISHERS – These are environmental responses that present an undesirable stimulus and serve to reduce the frequency of the behavior.

3. NEUTRAL OPERANTS – As the name suggests, these present stimuli that neither increase nor decrease the tested behavior.
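To make the three categories above concrete, here is a minimal Python sketch of how each one might change the likelihood of a behavior. It is a toy model, not Skinner's own formulation: the `Consequence` enum, the `update_probability` function, and the `rate` parameter are all hypothetical names and assumptions introduced for illustration.

```python
from enum import Enum


class Consequence(Enum):
    """The three kinds of environmental response described above."""
    REINFORCER = "reinforcer"
    PUNISHER = "punisher"
    NEUTRAL = "neutral"


def update_probability(p: float, consequence: Consequence, rate: float = 0.2) -> float:
    """Return the new probability of a behavior after one consequence.

    Assumption (not from the article): a simple linear update in which
    `rate` controls how strongly a single consequence shifts behavior.
    """
    if consequence is Consequence.REINFORCER:
        # Desirable stimulus: the behavior becomes more frequent.
        return p + rate * (1.0 - p)
    if consequence is Consequence.PUNISHER:
        # Undesirable stimulus: the behavior becomes less frequent.
        return p - rate * p
    # Neutral operant: no change in frequency.
    return p
```

In this sketch a behavior that starts at a 50% chance rises after a reinforcer, falls after a punisher, and stays put after a neutral operant.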

Throughout his long and storied career, Skinner performed a number of strange experiments trying to test the limits of how punishment and reinforcement affect behavior.

4 INTERESTING OPERANT EXPERIMENTS

Though Skinner was a professional through and through, he was also quite a quirky person. And, his unique ways of thinking are very clear in the strange and interesting experiments he performed while researching the properties of operant conditioning.

Experiment #1: The Operant Conditioning Chamber

The Operant Conditioning Chamber, better known as the Skinner Box, is a device that B.F. Skinner used in many of his experiments. At its most basic, the Skinner Box is a chamber where a test subject, such as a rat or a pigeon, must ‘learn’ the desired behavior through trial and error.

B.F. Skinner used this device for several different experiments. One such experiment involves placing a hungry rat into a chamber with a lever and a slot where food is dispensed when the lever is pressed. Another variation involves placing a rat into an enclosure that is wired with a slight electric current on the floor. When the current is turned on, the rat must turn a wheel in order to turn off the current.
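The lever-and-food version of the experiment can be pictured as a short simulation. The Python sketch below is only an illustration under stated assumptions: the function name `simulate_lever_training`, the starting probability, the learning rate, and the linear update rule are all hypothetical and do not come from Skinner's data.

```python
import random


def simulate_lever_training(trials=200, start_p=0.05, rate=0.1, seed=0):
    """Simulate a hungry rat in a chamber with a food lever.

    Assumptions (hypothetical, for illustration only):
    - on each trial the rat presses the lever with probability `p`;
    - every press delivers food, which reinforces pressing and nudges
      `p` toward 1 by a fraction `rate` of the remaining distance.
    Returns the number of presses and the final press probability.
    """
    rng = random.Random(seed)  # seeded so runs are repeatable
    p = start_p
    presses = 0
    for _ in range(trials):
        if rng.random() < p:       # the rat happens to press the lever
            presses += 1
            p += rate * (1.0 - p)  # food arrives, so pressing is reinforced
    return presses, p


if __name__ == "__main__":
    presses, final_p = simulate_lever_training()
    print(f"presses: {presses}, final press probability: {final_p:.2f}")
```

Early presses are rare and accidental, but each rewarded press makes the next one more likely, which is the trial-and-error learning the chamber was built to capture.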

Though this is the most basic experiment in operant conditioning research, countless variations can be built on this simple idea.

Experiment #2: A Pigeon That Can Read

Building on the basic ideas from his work with the Operant Conditioning Chamber, B. F. Skinner eventually began designing more and more complex experiments.

One of these experiments involved teaching a pigeon to read words presented to it in order to receive food. Skinner began by teaching the pigeon a simple task, namely, pecking a colored disk, in order to receive a reward. He then began adding additional environmental cues (in this case, they were words), which were paired with a specific behavior that was required in order to receive the reward.

Through this evolving process, Skinner was able to teach the pigeon to ‘read’ and respond to several unique commands.

The pigeon can’t actually read English, of course. But Skinner was able to use operant conditioning to teach a bird multiple behaviors, each one linked to a specific stimulus. This suggests that this form of behavioral learning can be a powerful tool for teaching both animals and humans complex behaviors based on environmental cues.

Experiment #3: Pigeon Ping-Pong

But Skinner wasn’t only concerned with teaching pigeons how to read. It seems he also made sure they had time to play games as well. In one of his more whimsical experiments, B. F. Skinner taught a pair of common pigeons how to play a simplified version of table tennis.

The pigeons in this experiment were placed on either side of a box and were taught to peck the ball to the other bird’s side. If a pigeon was able to peck the ball across the table and past its opponent, it was rewarded with a small amount of food. This reward served to reinforce the behavior of pecking the ball past the opponent.

Though this may seem like a silly task to teach a bird, the ping-pong experiment shows that operant conditioning can be used not only for a specific, robot-like action but also to teach dynamic, goal-based behaviors.

Experiment #4: Pigeon-Guided Missiles

Thought pigeons playing ping-pong was as strange as things could get? Skinner pushed the envelope even further with his work on pigeon-guided missiles.

While this may sound like the crazy experiment of a deluded mad scientist, B. F. Skinner did actually do work to train pigeons to control the flight paths of missiles for the U.S. military during the Second World War.

Skinner began by training the pigeons to peck at shapes on a screen. Once the pigeons reliably tracked these shapes, Skinner was able to use sensors to track whether the pigeon’s beak was in the center of the screen, to one side or the other, or towards the top or bottom of the screen. Based on the relative location of the pigeon’s beak, the tracking system could direct the missile towards the target location.
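The idea of turning a peck location into a course correction can be sketched in a few lines of Python. This is a hypothetical illustration, not a reconstruction of the actual hardware: the function name `steering_from_peck`, the pixel-style coordinates, and the normalized output range are all assumptions.

```python
def steering_from_peck(x, y, width, height):
    """Convert a peck location on the screen into a steering signal.

    Assumptions (hypothetical): `x` is measured from the left edge and
    `y` from the top edge of a screen `width` by `height` units in size.
    Returns (horizontal, vertical), each between -1 and 1:
      horizontal > 0 means steer right, < 0 means steer left;
      vertical   > 0 means steer up,    < 0 means steer down.
    A peck at the exact center returns (0.0, 0.0): stay on course.
    """
    half_w = width / 2
    half_h = height / 2
    horizontal = (x - half_w) / half_w
    vertical = (half_h - y) / half_h  # screen y grows downward
    return horizontal, vertical


if __name__ == "__main__":
    # A peck slightly right of center and above center on a 200 x 100 screen.
    print(steering_from_peck(120, 40, 200, 100))
```

The further the pigeon's beak lands from the center of the screen, the larger the correction, and a bird pecking dead center leaves the course unchanged.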

Though the system was never used in the field due in part to advances in other scientific areas, it highlights the unique applications that can be created using operant training for animal behaviors.

THE CONTINUED IMPACT OF OPERANT CONDITIONING

B. F. Skinner is one of the most recognizable names in modern psychology, and with good reason. Though many of his experiments seem outlandish, the science behind them continues to impact us in ways we rarely think about.

The most prominent example is in the way we train animals for tasks such as search and rescue, companion services for the blind and disabled, and even how we train our furry friends at home. But the benefits of his research go far beyond teaching Fido how to roll over.

Operant conditioning research now influences how schools motivate and discipline students, how prisons rehabilitate inmates, and even how governments handle geopolitical relationships.